Here we cover the basics of the nearest neighbour classifier by using it to predict the label of an image. The classifier is trained on a suitable set of labelled images. Supervised neighbors-based learning comes in two flavors: classification, for data with discrete labels, and regression, for data with continuous labels.
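The two flavors share the same neighbour search and differ only in how the neighbours' answers are combined. The sketch below is a minimal illustration that assumes 1-D toy inputs with absolute difference as the distance. The function names (`k_nearest`, `knn_classify`, `knn_regress`) and the data are hypothetical. Classification takes a majority vote over discrete labels, while regression averages continuous targets.

```python
from collections import Counter

def k_nearest(train_x, x, k):
    """Return indices of the k training inputs closest to x (1-D, absolute distance)."""
    order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return order[:k]

def knn_classify(train_x, labels, x, k):
    # Classification: majority vote over the discrete labels of the neighbours
    idx = k_nearest(train_x, x, k)
    return Counter(labels[i] for i in idx).most_common(1)[0][0]

def knn_regress(train_x, targets, x, k):
    # Regression: mean of the continuous targets of the neighbours
    idx = k_nearest(train_x, x, k)
    return sum(targets[i] for i in idx) / k

# Hypothetical toy data
xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
labels = ["low", "low", "low", "high", "high", "high"]
targets = [1.5, 2.5, 3.5, 10.5, 11.5, 12.5]

print(knn_classify(xs, labels, 2.4, k=3))  # low
print(knn_regress(xs, targets, 2.4, k=3))  # 2.5
```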

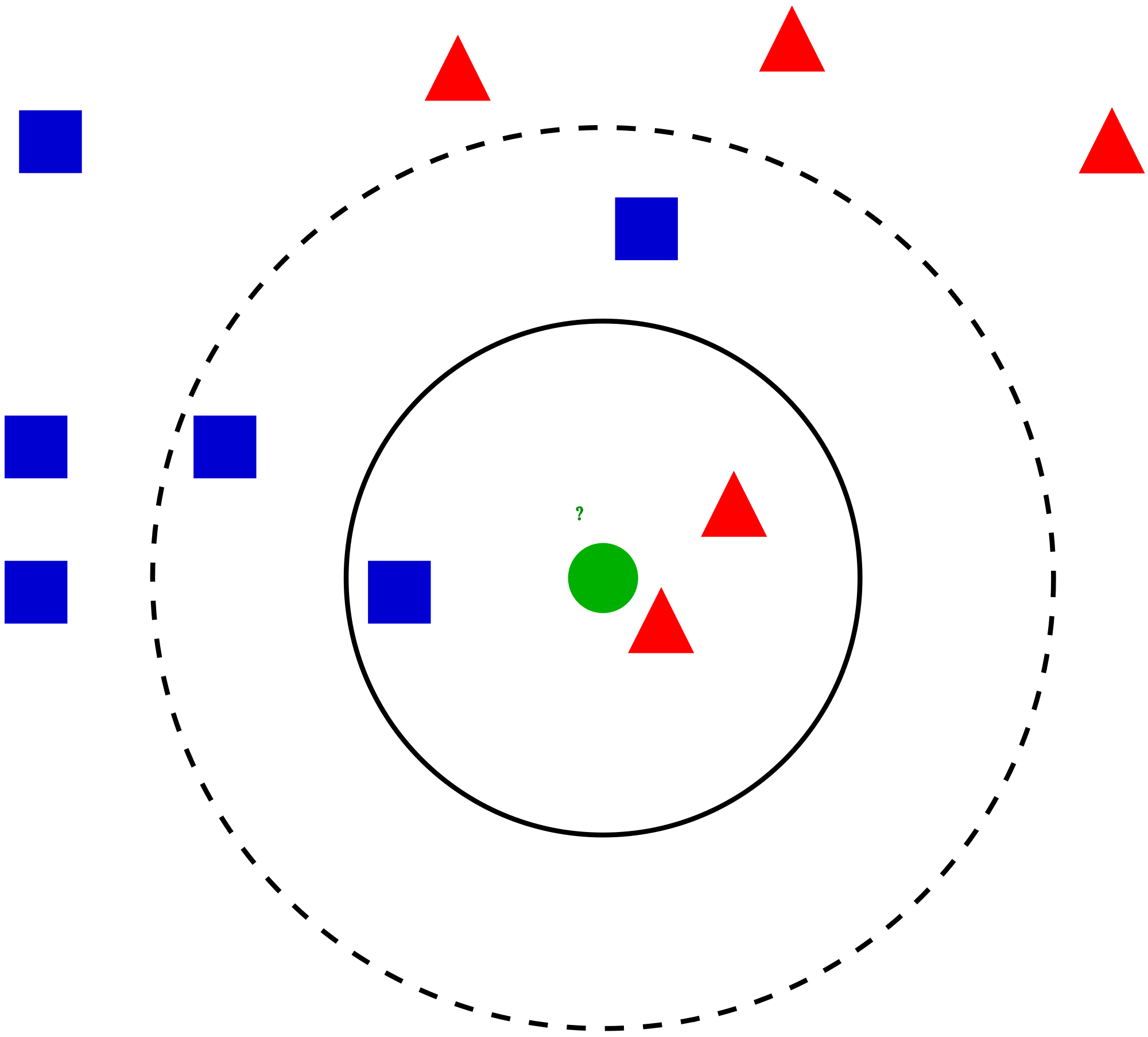

Nearest Neighbour Classifier. K nearest neighbors (KNN) is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., a distance function). An object is classified by a majority vote of its neighbors: it is assigned to the class most common among its k nearest neighbors.
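The store-then-vote rule can be sketched in a few lines. This is a minimal version that assumes each object (an image, for example) has already been turned into a numeric feature vector, and it uses Euclidean distance as the similarity measure. The helper names and the 2-D points are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def knn_predict(train_points, train_labels, query, k=3):
    """Assign query the most common label among its k nearest training points."""
    # Rank every stored case by its distance to the query
    ranked = sorted(range(len(train_points)), key=lambda i: euclidean(train_points[i], query))
    # Majority vote over the labels of the k closest cases
    votes = Counter(train_labels[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]

points = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]
print(knn_predict(points, labels, (2, 2), k=3))  # cat
print(knn_predict(points, labels, (5, 5), k=3))  # dog
```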

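In KNN, K is the number of nearest neighbors. K is generally chosen to be an odd number when there are two classes, so that the majority vote cannot end in a tie. The other main design choices are the distance measure and a few practical aspects of applying the method.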
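A few common distance measures, and the reason for preferring an odd K with two classes, can be checked directly. The sketch below uses the Minkowski family (p = 1 is Manhattan, p = 2 is Euclidean) and its p → ∞ limit (Chebyshev). The function names and the `is_tied` helper are illustrative only.

```python
def minkowski(a, b, p):
    """Minkowski distance: p=1 gives Manhattan, p=2 gives Euclidean."""
    return sum(abs(ai - bi) ** p for ai, bi in zip(a, b)) ** (1 / p)

def chebyshev(a, b):
    """Limit of Minkowski as p -> infinity: the largest coordinate difference."""
    return max(abs(ai - bi) for ai, bi in zip(a, b))

a, b = (0, 0), (3, 4)
print(minkowski(a, b, 1))  # 7.0 (Manhattan)
print(minkowski(a, b, 2))  # 5.0 (Euclidean)
print(chebyshev(a, b))     # 4

from collections import Counter

def is_tied(neighbour_labels):
    """True if the top two labels among the neighbours have equal vote counts."""
    counts = Counter(neighbour_labels).most_common()
    return len(counts) > 1 and counts[0][1] == counts[1][1]

# With two classes, an even K can produce a tied vote; an odd K cannot.
print(is_tied(["A", "B"]))       # True  (K = 2)
print(is_tied(["A", "B", "A"]))  # False (K = 3)
```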

A rote learner memorizes the entire training data and classifies a record only if its attributes exactly match one of the training examples. A nearest neighbour classifier relaxes this requirement by using the training examples closest to the record instead. The basic algorithm is simple: KNN is extremely easy to implement in its most basic form, and yet it can perform quite complex classification tasks.
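The difference between exact-match memorization (often called a rote learner) and nearest neighbour lookup shows up as soon as a record is not an exact copy of a stored example. This is a minimal sketch with hypothetical function names and data; `math.dist` (Python 3.8+) supplies the Euclidean distance.

```python
import math

def rote_classify(train, record):
    """Rote learner: return a label only if the record exactly matches a stored example."""
    for features, label in train:
        if features == record:
            return label
    return None  # unseen record: the rote learner cannot classify it

def nn_classify(train, record):
    """1-nearest-neighbour: return the label of the closest stored example."""
    _, label = min(train, key=lambda ex: math.dist(ex[0], record))
    return label

train = [((1.0, 1.0), "A"), ((5.0, 5.0), "B")]
print(rote_classify(train, (1.0, 1.0)))  # A
print(rote_classify(train, (1.2, 0.9)))  # None
print(nn_classify(train, (1.2, 0.9)))    # A
```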

Classification rule: for a test input x, assign the most common label among its k nearest training inputs. KNN is a lazy learning algorithm because it has no specialized training phase: it stores the training data and defers all computation until a query arrives. In addition, the KNN classifier is sensitive to the user-defined parameter K. It is one of the most widely used algorithms for classification problems. Local nearest neighbour classification with applications to …
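"Lazy" can be made concrete: in the sketch below, `fit` only stores the data, and every distance is computed inside `predict`. The class name `LazyKNN` and the toy data are hypothetical. The usage lines also show the sensitivity to K, since the same query gets a different label for K = 1 and K = 5.

```python
import math
from collections import Counter

class LazyKNN:
    """Minimal lazy kNN: fit() only stores data; all work happens in predict()."""

    def __init__(self, k=3):
        self.k = k  # user-defined parameter K

    def fit(self, X, y):
        # No model is built: the training set is simply memorized.
        self.X = list(X)
        self.y = list(y)
        return self

    def predict_one(self, x):
        # Distances are computed only when a query arrives (deferred, "lazy" work).
        nearest = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], x))[: self.k]
        return Counter(self.y[i] for i in nearest).most_common(1)[0][0]

    def predict(self, X):
        return [self.predict_one(x) for x in X]

X = [(0, 0), (0, 1), (1, 0), (3, 3), (3, 4)]
y = ["red", "red", "red", "blue", "blue"]
for k in (1, 5):
    print(k, LazyKNN(k=k).fit(X, y).predict([(2.2, 2.2)]))
# 1 ['blue']
# 5 ['red']
```

With K = 5 every stored point votes, so the larger class wins regardless of distance; this is one reason K is usually tuned rather than fixed.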

A new asymptotic expansion has been derived for the global excess risk of a local-k-nearest neighbour classifier, where the choice of k may depend on the test point. The k-nearest neighbor (KNN) algorithm is one of the simplest classification algorithms, and it is worth understanding both how it works and how to run it in R. As a comparison, we also show the classification … Izabela Moise, Evangelos Pournaras, Dirk Helbing.

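Learn about the algorithms implemented in the Intel(R) Data Analytics Acceleration Library. Variables in input data. When tested with a new example, the classifier looks through the training data and finds the k training examples that are closest to the new example.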

Decision tree and rule-based classifiers are designed to … We propose a trivial NN-based classifier, NBNN (Naive-Bayes Nearest-Neighbor). Train a KNN classification model with scikit-learn.
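Training a KNN model with scikit-learn takes only a few lines. The sketch below assumes scikit-learn is installed and uses its bundled `load_digits` dataset (8×8 grayscale digit images, already flattened to 64 features), which fits the image-labelling task from the introduction. The split size, `random_state`, and `n_neighbors=5` are illustrative choices, not tuned values.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Each digit image is a flattened vector of 64 pixel intensities.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# The default metric is Minkowski with p=2, i.e. Euclidean distance.
clf = KNeighborsClassifier(n_neighbors=5)
clf.fit(X_train, y_train)  # lazy: this essentially stores (and indexes) the training set
accuracy = clf.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```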

KNN is one of the simplest machine learning algorithms, in terms of both … Without another expensive survey, can we guess the classification of this new tissue? First, determine the parameter K, the number of nearest neighbors.
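One common way to determine K, assumed here rather than taken from the text, is cross-validation. The sketch below scores each candidate K by leave-one-out accuracy and picks the best, breaking ties toward the smaller K. The function names and toy data are hypothetical. The data include one mislabelled point near class A, which makes K = 1 overfit.

```python
import math
from collections import Counter

def knn_label(X, y, query, k, skip=None):
    # Rank all training points except index `skip` (the held-out point) by distance.
    idx = [i for i in range(len(X)) if i != skip]
    idx.sort(key=lambda i: math.dist(X[i], query))
    return Counter(y[i] for i in idx[:k]).most_common(1)[0][0]

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy: classify each point using all the others."""
    hits = sum(knn_label(X, y, X[i], k, skip=i) == y[i] for i in range(len(X)))
    return hits / len(X)

def choose_k(X, y, candidates=(1, 3, 5, 7)):
    # Best leave-one-out accuracy wins; ties go to the smaller K.
    return max(candidates, key=lambda k: (loo_accuracy(X, y, k), -k))

X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6), (0.5, 0.6)]
y = ["A", "A", "A", "A", "B", "B", "B", "B", "B"]  # last point is a noisy "B"
for k in (1, 3, 5, 7):
    print(k, round(loo_accuracy(X, y, k), 3))
print("chosen K:", choose_k(X, y))  # chosen K: 3
```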

For kNN classification, one seeks a linear transformation such that nearest neighbors computed from the distances in Eq. … In both uses, classification and regression, the input consists of the k closest training examples in the feature space.
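Why a linear transformation matters can be seen by measuring distance after mapping the inputs through a matrix L, i.e. ‖L(a − b)‖. In the sketch below, L is hand-picked for illustration; metric-learning methods would instead learn it from labelled data. All names are hypothetical. Down-weighting the second feature changes which training point is nearest.

```python
def transformed_distance(L, a, b):
    """Euclidean distance after mapping the difference through the linear map L: ||L(a - b)||."""
    diff = [ai - bi for ai, bi in zip(a, b)]
    mapped = [sum(row[j] * diff[j] for j in range(len(diff))) for row in L]
    return sum(m * m for m in mapped) ** 0.5

def nearest_index(L, train, query):
    return min(range(len(train)), key=lambda i: transformed_distance(L, train[i], query))

train = [(0.0, 3.0), (2.0, 0.0)]
query = (0.0, 0.0)
identity = [[1.0, 0.0], [0.0, 1.0]]
shrink_second = [[1.0, 0.0], [0.0, 0.1]]  # hypothetical L that down-weights feature 2

print(nearest_index(identity, train, query))       # 1: (2, 0) is closer
print(nearest_index(shrink_second, train, query))  # 0: (0, 3) becomes closer
```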

Arguably the most obvious defect of the k-nearest neighbour classifier is that it places equal weight on the class labels of each of the k nearest neighbours to the point being classified. Amazon SageMaker's k-nearest neighbors (k-NN) algorithm is an index-based algorithm.
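A simple way to remove the equal-weight assumption is to weight each neighbour's vote by the inverse of its distance. This is one common choice and is swapped in here for illustration; it is not necessarily the weighting scheme studied in the work quoted above. The function names, the `eps` guard, and the data are hypothetical. In the example, one very close "A" neighbour outvotes two distant "B" neighbours only when weights are used.

```python
import math
from collections import Counter, defaultdict

def k_nearest(points, query, k):
    return sorted(range(len(points)), key=lambda i: math.dist(points[i], query))[:k]

def unweighted_knn(points, labels, query, k=3):
    # Standard rule: each of the k neighbours gets exactly one vote.
    return Counter(labels[i] for i in k_nearest(points, query, k)).most_common(1)[0][0]

def weighted_knn(points, labels, query, k=3, eps=1e-12):
    # Inverse-distance rule: closer neighbours cast larger votes.
    scores = defaultdict(float)
    for i in k_nearest(points, query, k):
        scores[labels[i]] += 1.0 / (math.dist(points[i], query) + eps)  # eps guards d == 0
    return max(scores, key=scores.get)

points = [(0.0, 0.0), (3.0, 0.0), (3.0, 1.0)]
labels = ["A", "B", "B"]
query = (0.5, 0.0)
print(unweighted_knn(points, labels, query))  # B (two votes to one)
print(weighted_knn(points, labels, query))    # A (weight 2.0 vs about 0.77)
```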

KNN is a non-parametric method that can be used for both classification and regression.
